The term “fake news” is becoming part of our everyday vocabulary.

It’s never been easier to tell the world what you think. You can build an organic following on social media, or you can pay to get your message in front of billions of people.

Most of us are sending out positive and/or factual business-related content, but there are a few who create fake news stories to trick people for monetary gain or to sway public opinion.

This problem is most prevalent on Facebook, which is why Facebook has introduced a two-question survey to help combat fake news.

Two questions Facebook asks to combat fake news

Facebook is randomly sampling users every day and asking them the following two questions:

Do you recognize the following websites?

- Yes

- No

How much do you trust each of these domains?

- Entirely

- A lot

- Somewhat

- Barely

- Not at all

As you may have guessed, many people have criticized the survey as too basic, arguing that it could be gamed to make certain publishers appear untrustworthy.

Should publishers be worried?

Publishers should only be worried if they are posting fake news to mislead users for monetary gain.

I agree that the survey is simple, but that doesn’t mean it’s bad or useless.

Facebook has some of the most powerful machine learning algorithms in social media, and I’m sure it already has procedures in place to stop people from gaming the survey.

It would be naive to think that Facebook takes the survey at face value and relies on these two questions alone to decide whether a publisher is pushing out fake news.

Rumor has it that Facebook is also taking into account each user’s habits, behaviors, demographics, and interests, and factoring them into the overall score.

I would not be surprised if Facebook also draws on signals from a brand’s page, such as the sentiment of the comments posted there and the makeup of its fan base.

How should publishers react to this update?

Whether you post fake news or not, this update is big news.

Facebook is starting off with a simple survey, but who’s to say it won’t add buttons that let users report posts as fake news rather than spam, or send a page’s posts for manual review if the page scores poorly?

This update should send a message to publishers to keep their content honest.

Publishers that write 100% legitimate news stories but use provocative headlines and images could damage the trust that readers have in their work.

BuzzFeed News is known for using clickbait-style titles and images to get clicks. Even if the stories it covers are true, its attempts to add its own spin and influence readers’ thinking may hurt its trust factor.

Rolling Stone, for the most part, posts quality content and gives the reader all the information up front:

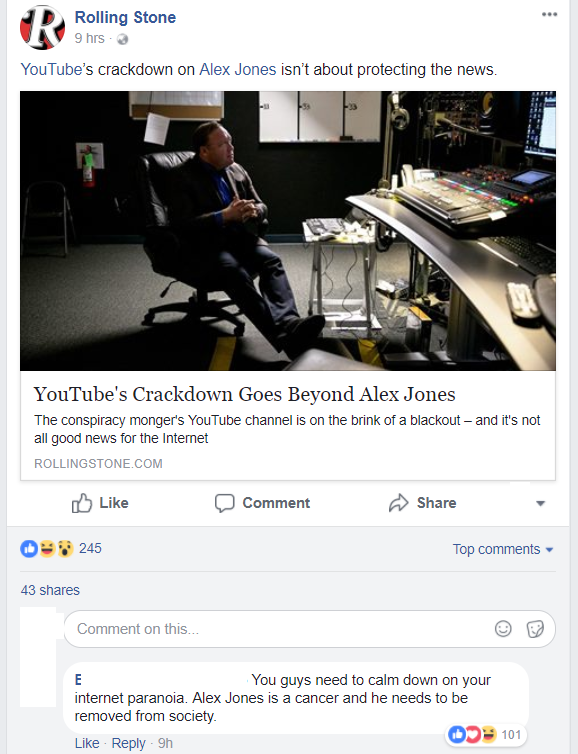

Then, every six to eight Facebook posts, it publishes something a little more controversial, but nothing too far-fetched.

I think Rolling Stone’s approach to dispensing content is the way forward. The majority of your posts should be factual, with the odd post here and there that some people may find controversial.

Facebook doesn’t want publishers spreading fake news or manipulating its users into thinking a specific way about a topic.

What do you think about Facebook’s two-question survey?

This is an interesting move by Facebook.

In the future, Facebook might start giving publishers a trust score from 1 to 5, use this data to stop pages from posting organic content, or even remove them from the platform altogether.

We do not know what the future holds. What we do know is that publishers need to be honest and upfront, and lead with the facts.